Checklist for Labeling AI-Generated Videos

AI-generated videos must be labeled to meet platform rules, avoid penalties, and maintain viewer trust. Platforms like YouTube enforce strict disclosure policies for synthetic or altered content, especially for sensitive topics like health, elections, or finance. Starting in 2025, creators must clearly disclose AI use to prevent misleading viewers. The EU AI Act, whose transparency obligations apply from August 2, 2026, adds legal requirements for any content that reaches viewers in the EU.

Key Points:

- Who needs to label? Both AI tool providers and content creators.

- What requires labels? Videos with realistic AI-generated elements (deepfakes, cloned voices, synthetic scenes).

- What doesn’t require labels? Minor edits (noise reduction, grammar checks) or clearly fictional content.

- How to label? Use platform tools like YouTube’s "Altered content" checkbox or manual disclaimers.

- Penalties for non-compliance: Fines up to €15 million (about $16.3M) or 3% of global annual revenue, whichever is higher, under the EU AI Act.

Creators should review their content, document AI usage, and monitor platform updates to ensure compliance.


Know Your Legal Requirements

Beyond YouTube's guidelines, the EU AI Act introduces strict transparency rules starting August 2, 2026. These rules reach beyond the EU's borders: if your content reaches viewers in the European Union, you’re required to follow them, no matter where you’re located. These legal standards work alongside platform policies to set clear expectations for how AI-generated content should be identified.

Who Needs to Label Their Content

The law identifies two key groups responsible for compliance:

- Providers: These are companies that develop AI tools, like OpenAI, Adobe, or Midjourney. They must embed machine-readable markers - such as watermarks, metadata, or cryptographic hashes - into every AI-generated output.

- Deployers: This includes individuals or organizations using these tools to create and share content. If you’re a creator using AI to produce videos, you’re required to disclose their artificial origin, even if the tools themselves include technical markers.

What Types of Videos Require Labels

Not all AI-assisted edits need to be labeled. The law focuses on "deep fakes" - content that closely mimics real people, places, events, or objects, and could deceive a reasonably attentive viewer. For example, if your AI-generated video includes realistic-looking individuals, locations, or events that could be mistaken for genuine footage, labeling is mandatory.

However, basic editing tools that don’t significantly alter the meaning of your content - like noise reduction, beauty filters, or grammar corrections - are exempt. On the other hand, using AI to clone voices, generate lifelike scenes, or manipulate footage of real events requires clear disclosure.

On the provider side, here’s how Article 50 of the EU AI Act frames the marking obligation:

"Providers of AI systems, including general-purpose AI systems, generating synthetic audio, image, video or text content, shall ensure that the outputs of the AI system are marked in a machine-readable format and detectable as artificially generated or manipulated." – Article 50, EU AI Act

For works that are artistic, satirical, or clearly fictional, the rules are somewhat relaxed. You still need to disclose AI use, but it should be done in a way that doesn’t detract from the viewer’s experience.

Deadlines and Penalties

The EU AI Act’s transparency rules officially take effect on August 2, 2026. Obligations for general-purpose AI models started applying earlier, on August 2, 2025. Failing to comply with the transparency rules can lead to severe penalties - up to €15 million (about $16.3 million) or 3% of a company’s total worldwide revenue from the previous year, whichever is higher. Even individual creators are at risk of fines if they fail to label AI-generated content that reaches EU audiences.
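The "whichever is higher" rule is easy to misread, so here is a minimal sketch of the arithmetic. It assumes the fixed cap is expressed in euros (EUR 15 million, the amount behind the dollar figure above) and a 3% turnover rate; the function name and the sample turnover figures are illustrative, and none of this is legal advice.

```python
def max_transparency_fine(annual_turnover_eur: float,
                          fixed_cap_eur: float = 15_000_000,
                          turnover_rate: float = 0.03) -> float:
    """Upper bound on a fine: the higher of the fixed cap and a share of turnover."""
    if annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    return max(fixed_cap_eur, turnover_rate * annual_turnover_eur)


# Hypothetical companies, for illustration only.
small = max_transparency_fine(2_000_000)        # 3% is 60,000, so the fixed cap applies
large = max_transparency_fine(1_000_000_000)    # 3% is 30,000,000, which exceeds the cap
```

For smaller channels and studios the fixed amount dominates; the percentage only becomes the binding figure once annual turnover passes EUR 500 million.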

A voluntary Code of Practice, expected by June 2026, will provide detailed instructions for compliance. Until then, creators should disclose AI use at the earliest point where viewers encounter their content.

Pre-Production: Evaluate Your Content

Evaluating the role of AI in your video production is crucial for staying transparent and compliant with regulations. Before diving into production, ask yourself a few key questions: How much AI is involved? Could viewers mistake it for real footage? How are human and AI elements blended? These considerations will help you categorize AI usage and decide on proper labeling, aligning with the legal guidelines mentioned earlier.

Determine How Much AI You're Using

Start by identifying the extent of AI's involvement in your content:

- Fully AI-generated content: If your video is almost entirely created by AI with minimal human input, disclosure is almost always required.

- Human-edited AI content: Content that has undergone significant human changes, like rewriting or adding original elements, may not need a label, depending on whether the remaining AI elements could still pass as real.

- AI-assisted content: When AI is used for minor tasks, such as grammar checks or color correction, labeling is generally unnecessary.

Pay close attention to AI-generated audio (like voiceovers or music) and visuals (such as deepfakes or synthetic scenes) to determine if a label is needed. Keep track of the tools you use for visuals, audio, and scripts to ensure consistent disclosure later. Some tools, like Adobe Firefly, embed metadata ("C2PA") into files, which platforms like Meta and TikTok can detect and may automatically label as AI-generated, even if you don’t manually disclose it. Once you've categorized your AI usage, consider whether viewers might misinterpret the content as authentic.

Check If Viewers Might Think It's Real

Ask yourself: Could an average viewer mistake this for real footage? YouTube warns:

"It can be misleading if viewers think a video is real, when it's actually been meaningfully altered or synthetically generated to seem realistic."

This is important because 94% of consumers believe all AI-generated content should be disclosed, and 82% support warning labels for AI-generated content depicting people saying things they didn’t actually say.

Content that is clearly unrealistic - like someone riding a unicorn or cartoonish animation - usually doesn’t need a label. However, if your video touches on sensitive topics like health, elections, finance, or conflicts, stricter standards apply. Platforms often use more prominent labels for these high-stakes categories.

Label Mixed Human and AI Content

If your video blends human-created footage with AI-generated elements, disclosure is necessary only if the AI portions are "meaningfully altered" or "synthetically generated" in a way that could appear real. For example, if you include AI-generated B-roll, such as a synthetic drone shot of a real city, viewers might assume it’s authentic. In such cases, you should disclose its AI origin.

On the other hand, AI used purely for productivity - like generating scripts, brainstorming ideas, or adding subtitles - doesn’t require disclosure. Similarly, minor cosmetic enhancements, such as color grading or background blur applied to human-created footage, are exempt from labeling. However, if you clone someone else's voice for a voiceover, you must enable the "Altered content" setting. Cloning your own voice for dubs, though, doesn’t require disclosure.
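The rules of thumb in this section can be sketched as a small decision helper. This is only an illustration of the reasoning above, not any platform's actual logic: the `AiUsage` fields, the function name, and the returned strings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AiUsage:
    # Set only for AI elements that could pass as real (e.g. synthetic B-roll of a real city).
    realistic_synthetic_media: bool = False
    # Someone else's voice; cloning your own voice for dubs does not set this flag.
    cloned_other_voice: bool = False
    # Health, elections, finance, or conflicts.
    sensitive_topic: bool = False


def disclosure_decision(usage: AiUsage) -> str:
    """Map the article's rules of thumb to a suggested action."""
    if usage.realistic_synthetic_media or usage.cloned_other_voice:
        return "disclose-prominently" if usage.sensitive_topic else "disclose"
    # Productivity uses (scripts, subtitles) and cosmetic edits never set the flags above.
    return "no-label-needed"


b_roll = disclosure_decision(AiUsage(realistic_synthetic_media=True))
election_clip = disclosure_decision(AiUsage(cloned_other_voice=True, sensitive_topic=True))
color_grade_only = disclosure_decision(AiUsage())
```

Writing the decision down this way also gives you a consistent answer to record when you document why a video was or wasn't labeled.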


How to Label Your Videos

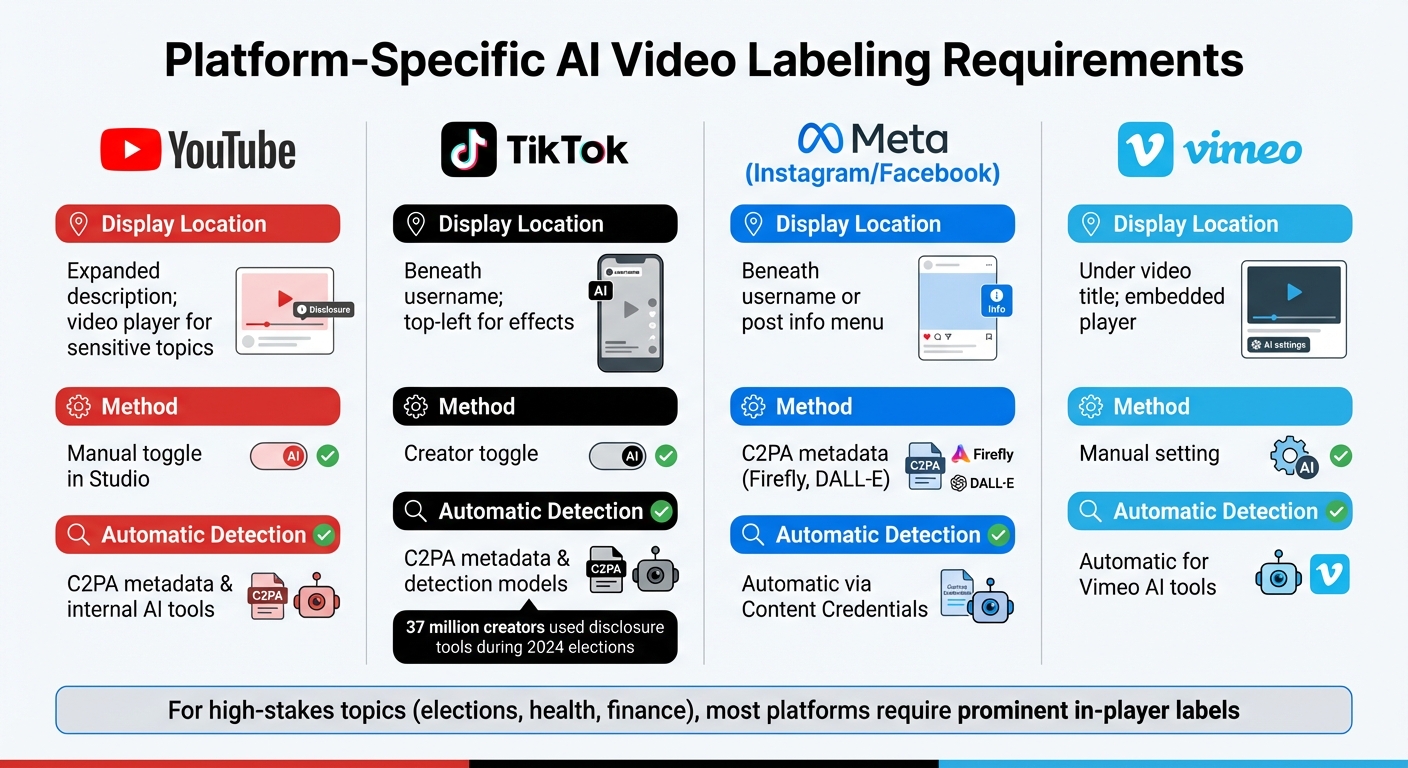

AI-Generated Video Labeling Requirements by Platform: YouTube, TikTok, Meta, and Vimeo

When it comes to disclosing AI usage in your videos, clarity is key. Here's how you can effectively label your content.

Choose Your Labeling Method

Many platforms offer built-in tools to help creators disclose AI usage. For instance:

- YouTube provides an "Altered content" checkbox in YouTube Studio, which adds a standardized "Altered or synthetic content" banner to your video.

- TikTok includes an "AI-generated content" toggle, placing a badge beneath your username.

If you're using tools like Adobe Firefly or DALL-E, they embed C2PA (Coalition for Content Provenance and Authenticity) metadata directly into your files. Platforms that read this metadata can apply an AI label automatically, but it does not replace your own disclosure.

For audio content, you can add disclaimers directly in the audio track, such as, "This voiceover was generated using AI." For video, on-screen text overlays or opening disclaimers work well, especially on platforms where metadata might not carry over.

Follow Platform Rules

Once you've chosen your labeling method, make sure it aligns with the specific rules of each platform. Here's a quick breakdown:

| Platform | Display Location | Method | Automatic Detection |
|---|---|---|---|
| YouTube | Expanded description; video player for sensitive topics | Manual toggle in Studio | C2PA metadata & internal AI tools |
| TikTok | Beneath username; top-left for effects | Creator toggle | C2PA metadata & detection models |
| Meta (IG/FB) | Beneath username or post info menu | C2PA metadata (Firefly, DALL-E) | Automatic via Content Credentials |
| Vimeo | Under video title; embedded player | Manual setting | Automatic for Vimeo AI tools |

Platforms like YouTube have found that "altered or synthetic" banners can slightly reduce click-through rates but significantly boost trust among viewers who are concerned about AI risks. TikTok saw 37 million creators use disclosure tools during the 2024 elections. Similarly, Meta's research shows that posts labeled with "AI Info" often receive more scrutiny in the comments.

For high-stakes topics - such as elections, health, or finance - many platforms require prominent labels directly on the video player. Disclosing correctly does not have to cost you anything: YouTube, for example, doesn’t penalize monetization or reach when altered content is correctly disclosed.

Make Labels Clear Without Disrupting Videos

Once you’ve met the platform's rules, focus on integrating labels in a way that doesn’t detract from the viewing experience. For general content, use tools like YouTube’s expanded description area. For more critical topics, consider in-player banners to ensure visibility.

If you're sharing content across multiple platforms, it’s wise to use a combination of methods. For example, activate the platform’s native toggle while also adding a manual caption, such as "Created with AI". This ensures the disclosure remains visible, even if metadata is stripped during resharing.
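As a minimal sketch of the manual-caption half of that approach, the helper below appends a disclosure line to a video description unless one is already there. The function name, the default label, and the list of terms it looks for are assumptions made for illustration; it does not call any platform API, and the native toggle still has to be set separately.

```python
# Phrases treated as an existing disclosure (simple, case-insensitive substring match).
DISCLOSURE_TERMS = ("ai-generated", "created with ai", "synthetic content")


def with_disclosure(description: str, label: str = "Created with AI") -> str:
    """Return the description with a disclosure line appended, unless one is already present."""
    lowered = description.lower()
    if any(term in lowered for term in DISCLOSURE_TERMS):
        return description
    stripped = description.rstrip()
    return f"{stripped}\n\n{label}" if stripped else label


labeled = with_disclosure("A sunrise tour of the old harbor.")
unchanged = with_disclosure("AI-generated sunrise tour of the old harbor.")
```

Because the check is a plain substring match, it is deliberately conservative: it never removes text and only adds the label when none of the listed phrases appears.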

Keep your labels straightforward. Terms like "AI-generated" or "Synthetic content" are easy to understand and avoid unnecessary technical jargon. If you’ve used AI-enabled software only to edit human-made content, re-encode the file to remove stale AI metadata that could trigger an inaccurate automatic label. This prevents mislabeling and keeps your disclosures accurate, which is what viewer trust depends on.

Document and Monitor Your Content

After publishing, keep tracking your AI usage so you are prepared for audits and can adapt to changes in platform rules. While planning is crucial, ongoing documentation strengthens your efforts to remain transparent.

Keep Records of AI Usage

Maintain a detailed log for every video, including its title, the AI tools you used, the elements they impacted, disclosure status, and your reasoning. A simple spreadsheet or a tool like Notion can help streamline this process. This record not only demonstrates your good-faith efforts but also provides evidence if a platform challenges your labeling decisions or if regulatory inquiries arise.

For cases where you decide a video doesn’t need a label because it isn’t realistic enough, document your reasoning clearly. If you work with a team, assign someone as a compliance manager to ensure consistency by using a standardized checklist. Additionally, take screenshots of your disclosure settings to create a verifiable record of compliance over time.

| Record Category | Specific Data to Maintain | Purpose |
|---|---|---|
| Tool Inventory | Names of AI software (e.g., Midjourney, ElevenLabs) | Legal protection and tracking of tools |
| Content Map | Timestamps or descriptions of AI-altered segments | Ensure accurate labeling and audit readiness |
| Decision Log | Reasoning for "Realistic" vs. "Non-realistic" classification | Defend against potential guideline strikes |
| Evidence | Screenshots of disclosure settings | Proof of compliance with platform rules |
| Human Oversight | Records of manual edits and creative input | Preserve monetization eligibility |
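If a spreadsheet is your log of choice, one way to keep entries consistent is to generate the rows from a fixed record shape. The sketch below uses only Python's standard library; the `AiUsageRecord` field names are one possible mapping of the categories above, and the sample entry is invented for illustration.

```python
import csv
import io
from dataclasses import dataclass, asdict, fields


@dataclass
class AiUsageRecord:
    video_title: str
    tools: str             # tool inventory, e.g. "Midjourney; ElevenLabs"
    altered_segments: str  # content map: timestamps or descriptions
    classification: str    # decision log: "realistic" or "non-realistic"
    disclosed: bool        # whether the platform disclosure setting was enabled
    reasoning: str         # why the classification was chosen


def write_log(records, stream) -> None:
    """Write records as CSV with a header row, ready to open in a spreadsheet."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(AiUsageRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


# Hypothetical entry; in practice, pass an open file instead of an in-memory buffer.
buffer = io.StringIO()
write_log([AiUsageRecord("Harbor flyover", "Midjourney; ElevenLabs",
                         "0:12-0:31 synthetic drone shot", "realistic", True,
                         "Could pass as real footage of a real city")], buffer)
```

Screenshots of disclosure settings and notes on manual edits do not fit neatly into a row, so store them alongside the file and reference them by video title.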

Update Labels When Rules Change

Platform policies are constantly evolving. For instance, YouTube updated its disclosure rules in mid-2025. When such updates occur, make sure to adjust your labels retroactively. YouTube Studio even allows you to edit the "Altered or synthetic content" toggle after a video has been published.

Stay on top of these changes by following official sources like YouTube Creator Insider or platform blogs, and save any relevant communications. If a platform adds a label to your content automatically, review it for accuracy and appeal the decision if necessary. This kind of vigilance matches the proactive labeling strategies discussed earlier.

Review Your Content Regularly

Set a recurring schedule and review older videos in batches of 10–20 to ensure they still align with current transparency standards. Use the "Average Viewer" test: ask yourself, "If someone watched this without any context, could they reasonably believe it’s real footage?" If the answer is yes and AI was used, double-check that a label is in place.
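The "Average Viewer" check lends itself to a quick pass over your own records. The sketch below assumes you keep, per video, three yes/no answers that you filled in by hand; the dictionary keys and the sample catalog are hypothetical.

```python
def videos_missing_labels(videos):
    """Return titles where AI was used, the result could pass as real, and no label is set."""
    return [
        video["title"]
        for video in videos
        if video["ai_used"] and video["could_pass_as_real"] and not video["labeled"]
    ]


# Invented catalog for illustration.
catalog = [
    {"title": "Harbor flyover", "ai_used": True, "could_pass_as_real": True, "labeled": False},
    {"title": "Unicorn ride", "ai_used": True, "could_pass_as_real": False, "labeled": False},
    {"title": "Studio interview", "ai_used": False, "could_pass_as_real": True, "labeled": False},
    {"title": "Cloned narrator", "ai_used": True, "could_pass_as_real": True, "labeled": True},
]
flagged = videos_missing_labels(catalog)
```

Only the first entry is flagged here: the unicorn video is clearly unrealistic, the interview uses no AI, and the narrator video already carries a label.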

Pay extra attention to sensitive areas like health, elections, finance, or conflicts, as platforms often enforce stricter labeling requirements for such topics. If your channel produces content frequently, consider adding a general AI disclosure to your "About" section. This lets viewers know you’re committed to ethical AI usage and compliance with platform rules.

Consistent documentation and regular reviews are essential for maintaining trust and demonstrating your dedication to transparency in AI usage.

Conclusion

Labeling your AI-generated videos isn’t just about following platform rules - it’s about protecting your channel, maintaining viewer trust, and avoiding penalties. YouTube’s mandatory disclosure tool in YouTube Studio is part of stricter enforcement efforts that could lead to suspension from the YouTube Partner Program for non-compliance. Similarly, Vimeo considers misleading AI-related content a "high-severity violation", which can lead to immediate removal.

To stay ahead of these risks, make labeling AI content a routine step in your upload process. Think of it as a safeguard, not an afterthought. Before hitting "publish", apply the "Average Viewer" test: Would someone reasonably believe the video is real without additional context? If the answer is yes and AI played a role, add a clear label. This small but crucial step can help you avoid strikes, keep your monetization intact, and show your dedication to ethical content practices. As Vimeo puts it:

"Transparency in disclosing altered or synthetic content is essential to upholding trust, integrity, and responsible media practices." - Vimeo

FAQs

What happens if AI-generated videos aren’t labeled properly?

Not being upfront about AI-generated videos can mislead your audience and even break platform rules. This might result in serious consequences like having your content removed, facing account restrictions, or losing the trust of your viewers. Platforms such as YouTube and Vimeo emphasize the importance of clearly disclosing AI-generated or AI-enhanced content to maintain transparency and user confidence.

To steer clear of these problems, make sure to follow the specific guidelines of each platform when labeling AI-generated content. Being transparent not only keeps you within the rules but also strengthens your credibility with your audience.

What steps should creators take to comply with platform rules and the EU AI Act for AI-generated videos?

To meet platform rules and the requirements of the EU AI Act, creators need to clearly label AI-generated videos. This transparency helps prevent misinformation and ensures viewers understand when content is created or modified using AI. The EU AI Act specifically highlights the importance of disclosing AI involvement, a stance that aligns with the policies of platforms like YouTube and Vimeo, which encourage clear labeling to avoid misleading audiences.

Here’s how creators can stay compliant:

- Clearly label your videos: Specify whether the content is fully AI-generated or simply AI-enhanced.

- Stay informed about regulations: Keep track of updates, such as the EU’s draft Code of Practice, which offers guidance on marking AI content appropriately.

- Follow platform-specific tools and guidelines: Use any resources provided by platforms to ensure your labeling meets their requirements.

Taking these steps not only helps creators meet regulatory standards but also fosters trust with their audience and reduces the risk of penalties as rules continue to develop.

When should AI-generated content include a disclosure?

AI-generated content should always include a clear disclosure when there's a chance it might mislead viewers into thinking it represents real people, places, or events. This is crucial when the content could be mistaken for genuine footage or imagery.

Transparent labeling plays a key role in maintaining trust with your audience, especially for creators using advanced AI tools to produce videos on a large scale. Prioritizing honesty not only aligns with platform guidelines but also ensures you meet audience expectations.


LongStories is constantly evolving as it finds its product-market fit. Features, pricing, and offerings are continuously being refined and updated. The information in this blog post reflects our understanding at the time of writing. Please always check LongStories.ai for the latest information about our products, features, and pricing, or contact us directly for the most current details.